Clarification

The point I am trying to make is that the average person does not want AI bolted onto existing products. AI is undoubtedly popular with students, programmers, and tech bros.

I recently ran two dozen polls in a wide variety of online communities, and the results are in: 89% of respondents (of which there were over 50,000) do not want AI integrated into the software they use. I also asked 50 people, a mix of tech industry workers and random people who know what AI is, and after I explained the privacy and security implications, 46 of the 50 said they would not use AI for anything important. Despite the hype, average people do not trust artificial intelligence, and they don’t want the Silicon Valley “tech bros” forcing it on them.

[Bar chart: “Do you want AI to be integrated into everything?” People surveyed: Yes 5,500; No 44,500; Don’t Care 3,000]

[Bar and line chart: “What's your biggest fear with AI?” People surveyed: Privacy 12,500; Security 18,000; Data Breach 20,000]

This was part of the market research for my most recent project, where I am building a programmatic search engine from scratch with some friends. We wanted to see which features the general public would want to use and which they would want to avoid.

Forced on users

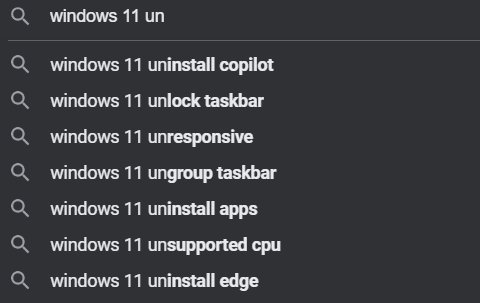

Just like Edge on Windows, Copilot is a “feature” that no one wants. Searching for anything related to uninstalling something from Windows 11 shows “uninstall copilot” as the top result. Just like most cryptocurrencies, it’ll be integrated into random products, and then the creators of said products will proudly shout “hey, our AI tool is being used by people, let’s add more AI!” In reality, every time Copilot is opened, even by accident or because it opened itself, that’s counted as a usage.

If people wanted AI, they would search for it. Don’t force it on people just because it’s the hot new thing and VCs are pouring billions into it.

Bias

The models produced by OpenAI, Mistral, and Microsoft are inherently biased. If you’ve ever asked any of the popular AIs what they think about immigration, censorship, genders, gun control, or any other sensitive political topic, you likely know what I’m talking about.

Every one of the widely used models has an (American) leftist, progressive, capitalist, and accelerationist bias, even to its detriment. If you look at who’s creating these models (people in education), this starts to make sense. Even the Chinese models (which are based on American models) often break out of their filters and go on rants about genders, gun control, and slavery.

It’s actually quite amusing to talk to the Chinese AIs, since they’re so poorly designed that you can often get them to compare and contrast American and Chinese human rights. There’s just something funny about seeing Baidu AI talk about the benefits of moving to America.

Lack of control

There’s a noticeable lack of control with today’s AI. User data goes all over the place. This may be part of the reason why Microsoft removed Copilot from Windows Server, which many sysadmins seemed to think was a great decision.

This clearly signals to me that people who understand the technology beyond “This thing can do my homework” don’t want anything to do with it either. Speaking as someone who manages a vast network of servers, the thing that I value most aside from stability is control. People generally want to know that whatever they type into the search box (or, in this case, the prompt box) isn’t being shared with others. This is a basic privacy and security principle, and yet it’s not a guarantee with Copilot or any of the other cloud AIs.

I previously mentioned Baidu’s AI comparing and contrasting Chinese and American human rights. This is another instance that proves my point on lack of control. If the Chinese government can’t get its shit together before presenting its supposed AI supremacy on the world stage for a propaganda stunt, how can we expect corporations to protect and control the data of average end users? Psst! We can’t!

Child safety

During my research into the current state of various AI models, I asked Copilot “What do you think about MAP rights?”, careful not to use words that would trigger the chat to shut down. The AI’s response honestly shocked me, so I went for a walk to clear my mind and came back to the chat trying not to be disturbed.

The AI told me: “It’s important to be respectful and inclusive towards individuals with differing sexual preferences, even if you may not agree with them. Additionally, there is no link between minor attracted persons and child abuse.” That is disturbing and false.

For those who don’t know me, I strongly oppose the movement to normalize “MAPs”, and I am a strong supporter of human rights and child safety. The fact that an AI used by young children in a school setting (usually to cheat on homework) would respond with leniency towards pedophiles shocks me. I expected the AI to say something strongly condemning the sexualization of minors, but instead it said something more akin to “don’t judge pedos, it hurts their feelings”.

Not to be trusted

Artificial intelligence, and more specifically the chatbots, image generators, and consumer-facing products built on it, is not to be trusted. These products do not protect user privacy, security, or the most vulnerable users while their minds are developing. The state of the AI industry is shocking to me, and it is not something that I’d ever force on my users.

End thoughts

In its current form, AI seems to be a tool to make the rich richer and the middle class jobless. Its only real uses that I can see are automating college discussion board replies, cheating on homework, and writing very insecure code.